AI Is Everywhere in Law — and 68% of Lawyers Trust It With Sensitive Data

The majority of legal professionals use AI today, and a new Rev study shows 68% trust AI tools with sensitive client data.

In just a few years, generative AI has gone from an experimental oddity to a critical tool for millions of people, including legal professionals. While AI can’t replace your best paralegal, it can fire off professional client emails and transcribe hours of witness testimony in a flash. In the end, it can save overworked lawyers a lot of headaches.

Our new data shows that 69% of legal professionals use AI-powered tools in their work at least occasionally. Using AI to draft an email carries relatively little risk, but lawyers often handle confidential client documents. Even so, more than two-thirds (68%) of legal professionals say they trust AI with sensitive client information.

Many are using tools specifically designed for legal professionals that protect client data. But some lawyers also say they trust tools that are designed for widespread use, like ChatGPT. These tools are great for quick questions and brainstorming, but they often reveal little about how they handle data, and their data security can be relatively lax.

Our survey of over 500 legal professionals breaks down how attorneys and paralegals are using AI — and how secure tools can both save time and keep clients’ data safe.

Key Takeaways

- 69% of legal professionals use AI-powered tools at least occasionally. 24% use them daily.

- 68% trust AI tools with sensitive client information. They tend to trust programs designed for legal work more often, but 33% also trust generative AI tools like ChatGPT.

- 35% of legal professionals are most concerned about inaccurate or incomplete information when using AI tools in legal settings.

- When evaluating whether an AI vendor is secure enough, 56% of legal professionals say they want systems that do not use client data for training or external purposes.

More Than 2 in 3 Legal Professionals Use AI in Their Work

If you’re in the legal field, you’ve probably spent hundreds of hours poring over documents and recordings for trial prep. Today, many legal professionals have realized AI tools can condense all those files in the blink of an eye.

Our survey found that more than two-thirds (69%) of legal professionals use AI-powered tools at least occasionally, with 24% using them every day. Those who haven't jumped on board are in the minority: only 17% have never used legal AI tools at all.

Legal professionals need to be more careful than the average person when using AI at work. Attorneys have a legal and ethical duty to keep their clients' personal information secure. Before feeding sensitive client data into a chatbot, lawyers must first make sure the model won't be trained on that data and that the information can't leak later.

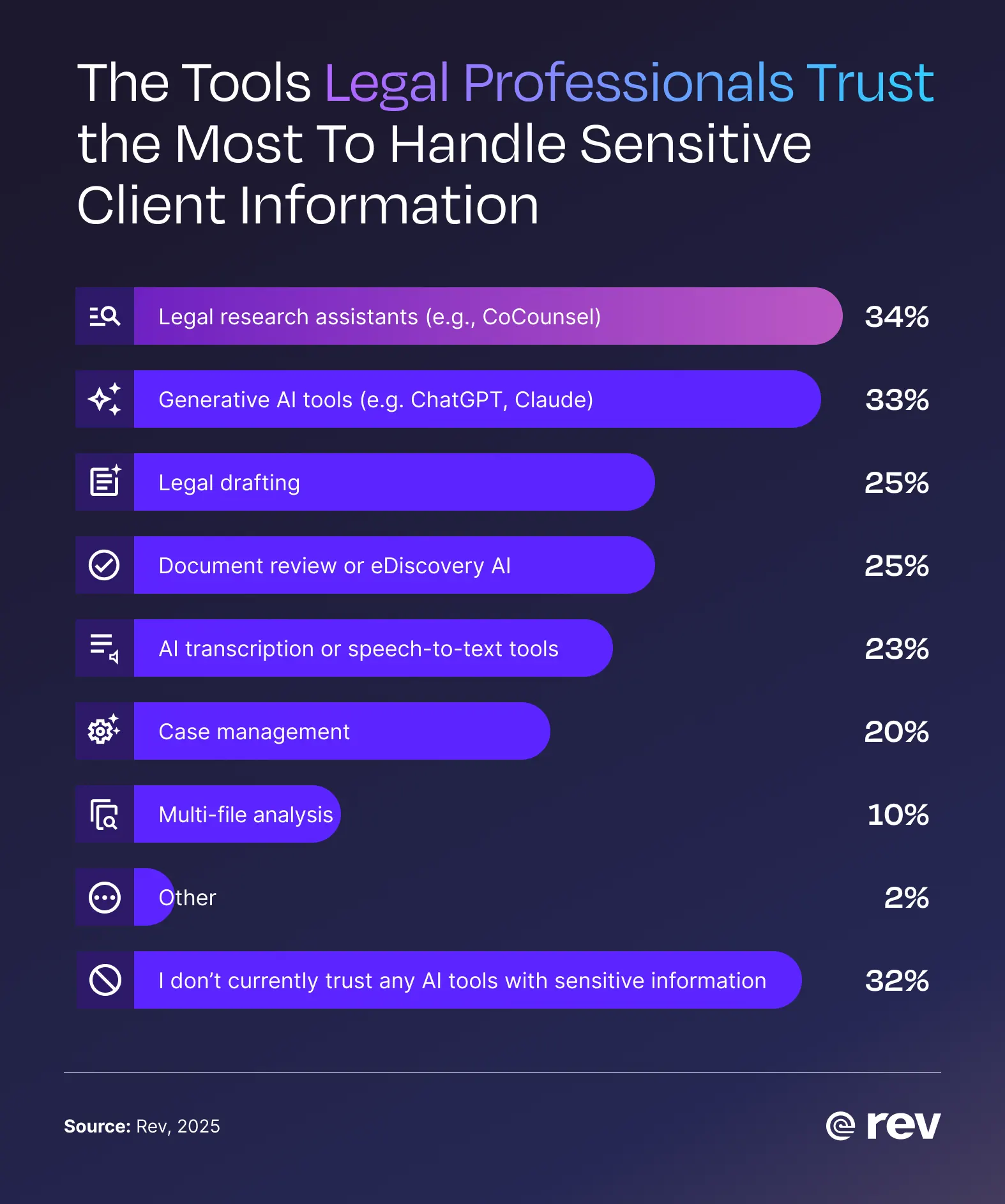

Most legal professionals (68%) trust at least some AI tools with sensitive client information. They most commonly trust platforms designed specifically for legal work, like CoCounsel, and they often trust other specialty programs too, such as legal drafting tools (25%) and document review tools (25%). In addition, 33% say they trust generative AI tools like ChatGPT, and 23% trust AI transcription or speech-to-text tools.

Concerns Remain as AI Runs Into Problems With Legal Jargon

While AI is growing more intelligent every day, it’s still capable of making errors. That’s a problem for lawyers, since even basic errors in a pleading or contract could have serious consequences for both the attorney and client.

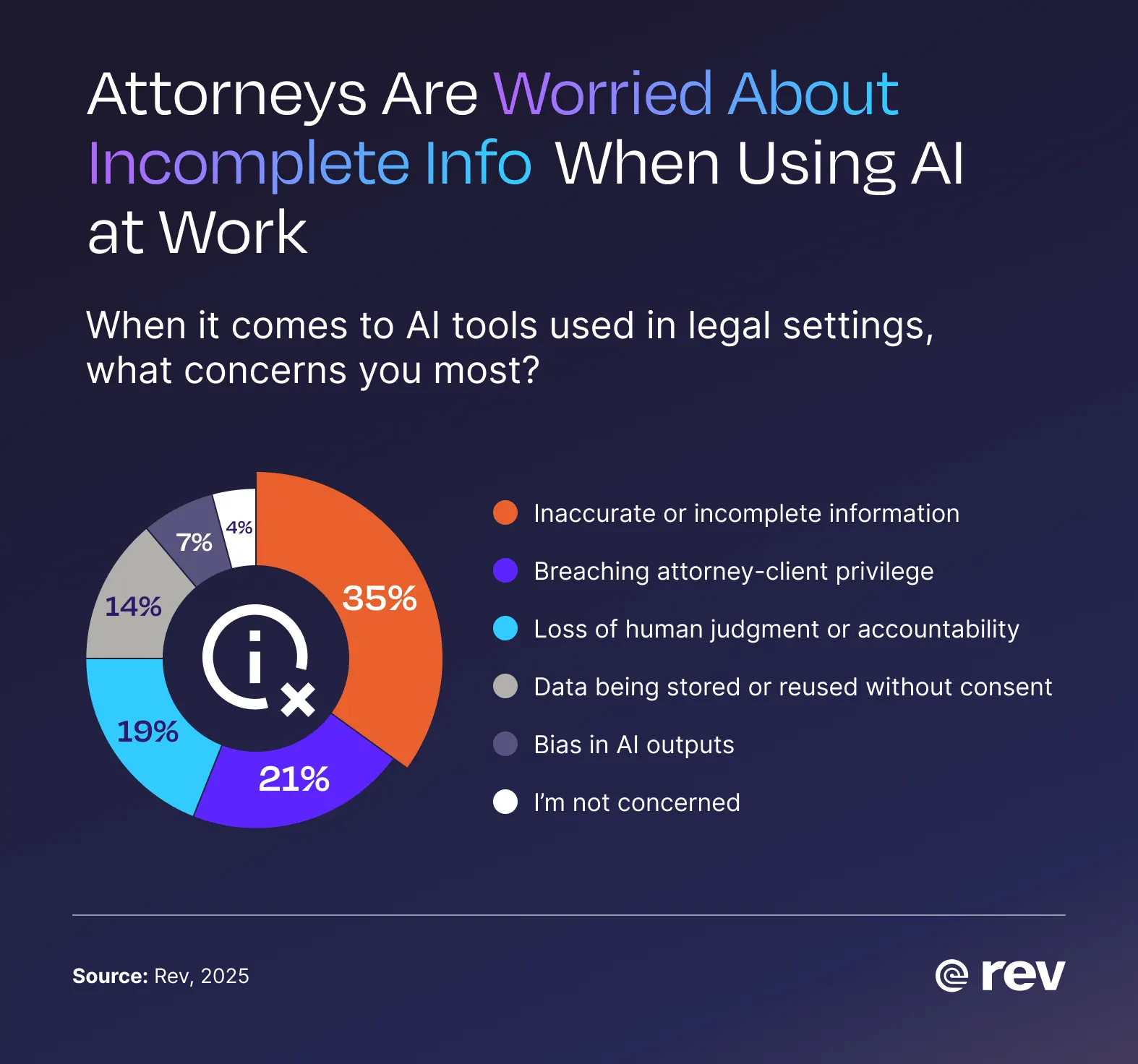

When using AI tools in legal settings, 35% of legal professionals say they're most concerned about inaccurate or incomplete information. Others worry most about breaching attorney-client privilege (21%) and the loss of human judgment or accountability (19%).

General AI platforms can struggle with complex legal topics. Most legal professionals (86%) say they have already noticed errors or inaccuracies in AI-generated transcripts at work.

Popular generative AI models like ChatGPT can give incorrect answers because they predict text based on patterns in the data they were trained on, much of it gathered from across the web. They're built to produce fluent, plausible responses, so they can say something that sounds right but isn't. That's what's known as an AI hallucination.

Vectara, an AI company that tracks hallucination rates, estimates that the latest ChatGPT 5.2 model hallucinates between 8% and 11% of the time. General-purpose AI models like ChatGPT weren't designed for legal work, which demands tools that get the details right every time.

Closed-loop AI can be better suited for legal documents because it works only from the source material you provide. It isn't necessarily error-free, but it's less likely to hallucinate, and when it's trained on legal data, it can handle legal jargon better.

Legal professionals say the most common errors they encounter stem from nuanced or firm-specific language (18%) and AI hallucinating case law (17%). Others cite complex legal terminology (15%) or errors that occur when transcribing multiple speakers talking at once (14%).

If you work in the legal field and you want to streamline your work with AI, you’ll want a closed-loop tool designed to work with legal jargon.

Overreliance on AI in Legal Work Could Be a Concern

When used correctly, AI can save a lot of time, and that's a boon to law firms. Any lawyer can tell you that their job involves a lot more paperwork than high-stakes courtroom proceedings.

In fact, organizing files and other low-level tasks can place a lot of strain on legal professionals. That’s especially true for lawyers early in their careers, who take on more of that work than seasoned attorneys.

We've previously published research showing that most legal professionals face burnout. AI tools can make basic legal tasks much easier, giving lawyers time back to focus on their clients. But despite the time savings, lawyers still have concerns about AI use in their industry.

More than half (58%) of legal professionals say overreliance on AI is a greater risk to legal integrity than underreliance. Another 28% say underreliance and overreliance both threaten legal integrity in different ways.

The takeaway? Accurate, secure AI programs can save law firms a lot of time. But it's important to remember that they're tools, just like any other software. Combining AI's speed with human insight and judgment provides the most accuracy and value.

AI Systems Must Be Transparent and Secure to Be Safe Enough for Lawyers

We’ve highlighted that lawyers have a legal and ethical responsibility to keep their clients’ data safe. Top-notch security is key to using AI effectively at the office and in the courtroom.

More than half (56%) of legal professionals say that when evaluating whether an AI vendor is secure enough, their top priority is AI systems that do not store client data for training or external purposes.

Other key criteria include:

- Data handling transparency: 49%

- Meeting compliance standards, like HIPAA, SOC 2, or CJIS: 43%

- Option for human review under confidentiality agreements: 39%

- Protected sharing ability: 34%

- Cost and efficiency: 28%

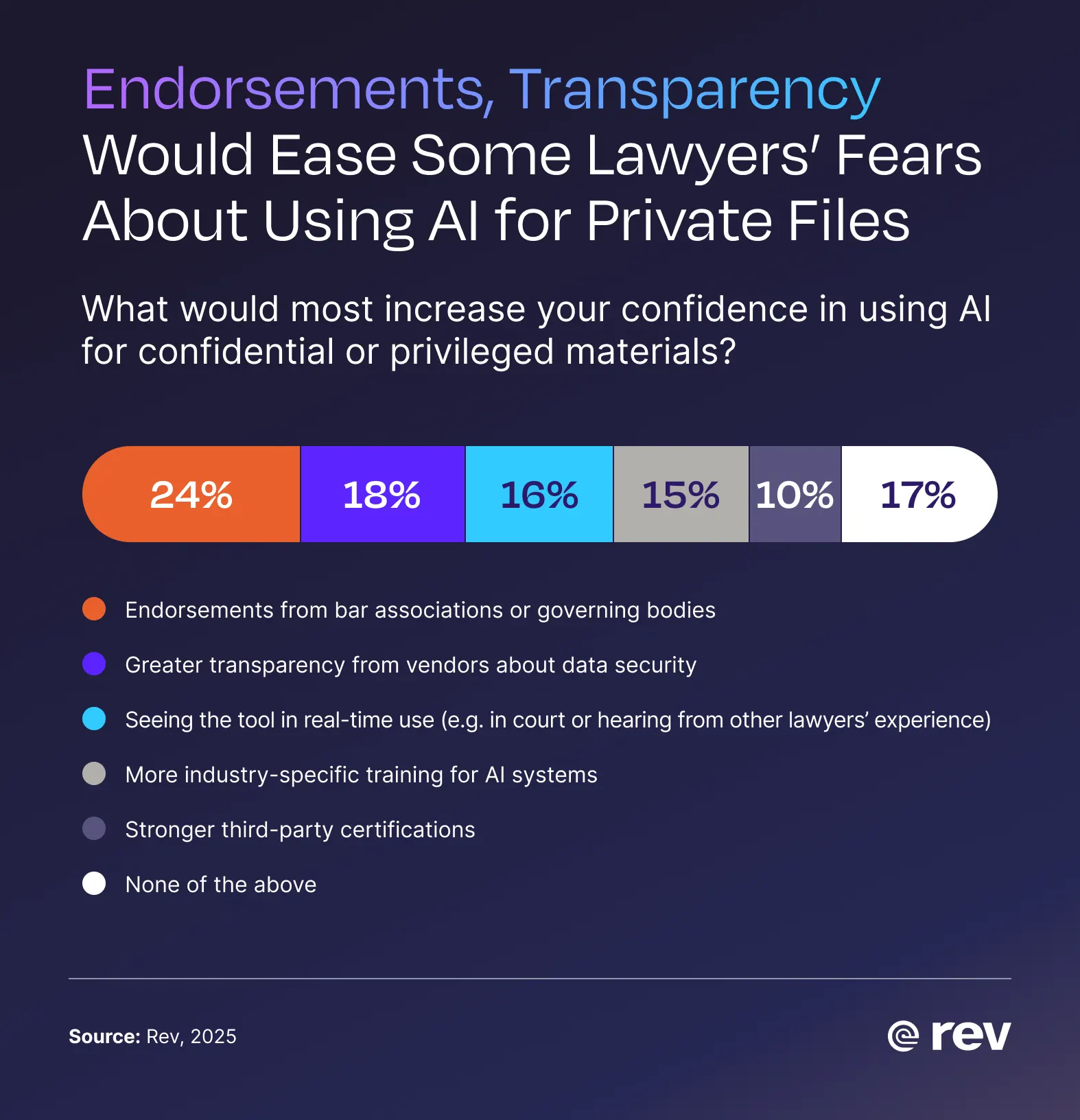

A trustworthy AI service will disclose how and why it uses confidential client data. But legal professionals say an endorsement from bar associations and seeing the tool used in real time would help, too.

Some legal tools, like Rev, disclose how they use data and already partner with major industry leaders, including the American Bar Association and the State Bar of Texas. Those organizations endorse reliable legal tools and often offer members discounts on paid services.

Keep Your Clients’ Stories Safe With Rev

Protecting attorney-client privilege while cutting hours of tedious paperwork isn't easy for anyone, let alone an AI service. But Rev is up to the challenge.

Rev is an industry leader in accurate, secure legal transcription and notetaking. We take attorney-client privilege as seriously as you do, and we never share your data with third parties. Let Rev take notes in client meetings, transcribe depositions, and draft case reports, so you can focus your energy on helping your clients.

Methodology

The survey was conducted by Centiment between December 1 and December 12, 2025, among 520 legal professionals ages 18 and older. Respondents were screened to ensure they work in legal roles and have familiarity with evidence review processes. The survey included eight core questions and five demographic questions. Data is unweighted, and the margin of error is approximately ±3% for the overall sample at a 95% confidence level.